The Next Wave Of AI Computing

AI chips and the tech sovereignty of Europe, Greek university spin-offs, tech cycles & venture capital, hiring product managers, jobs, events, and more

Welcome back to Startup Pirate, a newsletter about what matters in tech and startups with a Greek twist. Come aboard and join 4,483 others. Still not a subscriber? Here’s what you recently missed:

Find me on LinkedIn or Twitter,

-Alex

The Next Wave Of AI Computing

AI chips, edge computing, and how Axelera AI can help close the tech sovereignty gap of Europe

The idea of artificial intelligence goes back at least as far as Alan Turing, the British mathematician who helped crack coded messages during WWII. “What we want is a machine that can learn from experience,” Turing said in a 1947 lecture in London. A few years later, a checkers-playing program became one of the earliest successful AI programs. It took a series of booms and busts for AI to make the inroads into everyday life that we see today, and for ChatGPT to become the most successful tech launch since the iPhone. Even artificial general intelligence doesn’t sound like sci-fi anymore.

The most advanced AI services demand costly computing power: the ability to churn quickly through the data and maths of the most intensive machine learning workloads. This inevitably creates windfalls for those who supply it. And who sells the shovels in the AI gold rush? The big cloud providers: Amazon, Microsoft, Google. Developers flock to them for powerful compute and data storage. But another industry made the gold rush possible in the first place.

Though less buzzy than other technologies, AI chips (hardware dominated by a specialized type of microprocessor called the graphics processing unit, or GPU) provide the computing power that makes these advanced calculations possible. A single chip may house tens of billions of transistors, each with a gate just a few nanometers wide. NVIDIA pivoted from games and graphics hardware to dominate AI chips, and markets responded. With hundreds of GPUs needed to train large language models (LLMs) with billions of parameters, such as GPT-3, it’s time to “Dream big and stack GPUs”.
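To get a feel for why LLM training needs so many GPUs, here is a back-of-envelope sketch using the widely cited rule of thumb that training a dense transformer takes roughly 6 × N × D floating-point operations (N parameters, D training tokens). GPT-3's published scale is about 175 billion parameters trained on about 300 billion tokens; the sustained per-GPU throughput and the cluster size below are hypothetical assumptions, not measurements.

```python
# Back-of-envelope estimate of LLM training compute and wall-clock time.
# Assumes the common "6 * N * D" FLOPs rule of thumb for dense transformers.

SECONDS_PER_DAY = 86_400


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total training FLOPs: ~6 FLOPs per parameter per token
    (roughly 2 for the forward pass and 4 for the backward pass)."""
    return 6 * n_params * n_tokens


def gpu_days(total_flops: float, flops_per_gpu: float) -> float:
    """GPU-days needed at a given *sustained* throughput per GPU."""
    return total_flops / (flops_per_gpu * SECONDS_PER_DAY)


if __name__ == "__main__":
    # GPT-3 scale: ~175B parameters trained on ~300B tokens.
    total = training_flops(175e9, 300e9)
    # Hypothetical sustained throughput of 100 TFLOP/s per GPU.
    days_one_gpu = gpu_days(total, 100e12)
    n_gpus = 1_000  # hypothetical cluster size
    print(f"total compute: {total:.2e} FLOPs")
    print(f"one GPU: {days_one_gpu:,.0f} days; {n_gpus} GPUs: {days_one_gpu / n_gpus:,.1f} days")
```

Under these assumed numbers, a single GPU would need roughly a century, while a thousand-GPU cluster brings training down to about five weeks.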

But a raft of companies say a new architecture is needed for the fast-evolving AI field, perhaps also to keep AI from becoming an environmental disaster. Danish researchers calculated that training GPT-3 could have the same carbon footprint as driving 700,000 km, almost twice the distance between the Earth and the Moon. The key lies in the next generation of AI chips.
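The distance comparison is easy to check: 700,000 km is about 1.8 times the average Earth-Moon distance of roughly 384,400 km. The small sketch below also shows how an emissions figure converts into car-kilometre equivalents; the emissions total and the per-km car factor in it are hypothetical placeholders, not the Danish researchers' inputs.

```python
# Sanity-check the Earth-Moon comparison and convert CO2 into driving distance.

EARTH_MOON_KM = 384_400  # average Earth-Moon distance in km


def km_equivalent(emissions_kg: float, kg_co2_per_km: float) -> float:
    """Kilometres a car would need to drive to emit the same amount of CO2."""
    return emissions_kg / kg_co2_per_km


def earth_moon_multiples(km: float) -> float:
    """How many one-way Earth-Moon distances a given distance covers."""
    return km / EARTH_MOON_KM


if __name__ == "__main__":
    print(f"{earth_moon_multiples(700_000):.2f}x the Earth-Moon distance")  # 1.82x
    # Hypothetical inputs: 10,000 kg of CO2 and a car emitting 0.12 kg CO2 per km.
    print(f"{km_equivalent(10_000, 0.12):,.0f} km of driving")
```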

Let’s begin.

From Cloud to the Edge

The dawn of cloud computing in the early 2000s gave rise to new offerings (SaaS, IaaS, PaaS) that changed the economics of IT-dependent businesses. Businesses enjoyed limitless compute-on-demand, flexible pricing, and simplified IT processes, as computing capabilities were delivered as a service via the Internet from a distant, global resource (aka large data centres). The internet entered its “Lego era”.

Since then, how we use technology has changed: smartphones, autonomous cars, factory robots, drones, VR headsets, home automation, satellites — physical objects with sensors that exchange data with other devices and systems over the Internet or other communications networks. The worlds of bits and atoms started to converge. Ergo, the volume of data these devices generate surpasses anything we’ve seen before. And for them to be smart, they should process the data they collect, share timely insights, and, if applicable, take appropriate actions.

As we connect billions of devices in our cities, seas, skies, and space to the network, computing power has to move closer to where data is generated: the “edge”. Edge computing turns networks from highways running to and from a central location into something akin to a spider’s web of interconnected, intermediate storage and processing devices. It captures, processes, and analyses data near where it is created, rather than always at a data centre. We gain speed, efficiency, and data privacy; we give up the near-limitless scalability of cloud resources. It is an add-on to the cloud, not a replacement.
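As a rough illustration of processing data near where it is created, here is a minimal sketch of an edge node that analyses raw sensor readings locally and forwards only a compact summary, plus any out-of-range readings, to the cloud. The threshold, the `Upload` format, and the function names are hypothetical, chosen only to show the pattern.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class Upload:
    """What the edge node sends to the cloud instead of the raw data stream."""
    count: int
    average: float
    anomalies: List[float]


def process_at_edge(readings: List[float], threshold: float) -> Upload:
    """Analyse a window of readings locally; keep only a summary and outliers.
    Raises statistics.StatisticsError if the window is empty."""
    anomalies = [r for r in readings if r > threshold]
    return Upload(count=len(readings), average=mean(readings), anomalies=anomalies)


if __name__ == "__main__":
    # Hypothetical temperature readings from one sensor over a short window.
    window = [21.0, 21.2, 20.9, 35.5, 21.1, 21.0]
    upload = process_at_edge(window, threshold=30.0)
    print(upload)
```

Only a count, an average, and one anomalous reading leave the device instead of the full stream, while the cloud remains the place for heavy, fleet-wide analysis, which is why the two complement each other.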

So what’s edge computing’s killer application?

AI at the Edge

Think of a self-driving car that sees a stoplight turning red and applies the brakes. A fleet of delivery drones that changes landing spots to prevent collisions. Or a facial recognition security system that reports an incident in real time. Sending data back and forth between the device and the cloud for processing would take too long, and a slow response from such systems could be catastrophic. Lives might be at risk.
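A quick calculation shows why latency matters. The sketch below computes how far a car travels while it waits for a decision; the speed and both latency figures are illustrative assumptions, not measurements of any particular network or vehicle.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Metres travelled at constant speed while waiting `latency_ms` for a decision."""
    metres_per_second = speed_kmh * 1000 / 3600
    return metres_per_second * latency_ms / 1000


if __name__ == "__main__":
    speed = 100  # km/h, hypothetical motorway speed
    # Hypothetical latencies: on-device inference vs. a round trip to a distant data centre.
    for label, latency in [("on-device", 10), ("cloud round trip", 200)]:
        metres = distance_during_latency(speed, latency)
        print(f"{label:>16}: {latency:>3} ms -> {metres:.2f} m travelled")
```

At these assumed figures, the car covers more than five metres before a cloud-based answer arrives, versus under 30 centimetres with on-device inference.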

We need to move AI to the outer edges of networks. We need Edge AI.

Running AI algorithms on devices in the physical world means the system can process data and act within milliseconds, without the round-trip latency to a distant data centre or downtime when the connection drops. It also means devices can make do with smaller batteries, as they consume less energy by not constantly sending data to the cloud. All this is possible thanks to advances in machine learning and computing infrastructure.

But, as we said, running AI algorithms on small devices has its limits: computing capacity is constrained. This is why AI models are typically developed in the cloud (feeding a model with data so that the system “learns” the patterns in the type of data it will analyze, the so-called “training”) and put to work at the edge (the more lightweight task of using a trained model to interpret new, real-world data, the so-called “inference”).
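The asymmetry between training and inference shows up even in a toy model. In this minimal sketch (a one-variable linear regression fitted by gradient descent, chosen purely for illustration), `train` sweeps over the whole dataset thousands of times, the expensive step that would run in the cloud, while `infer` is a single multiply-add per input, the kind of lightweight work an edge device can handle. All function names and data are hypothetical.

```python
from typing import List, Tuple


def train(xs: List[float], ys: List[float], lr: float = 0.05,
          epochs: int = 2000) -> Tuple[float, float]:
    """Fit y ~ w*x + b by batch gradient descent on mean squared error (the costly part)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(params: Tuple[float, float], x: float) -> float:
    """Apply the trained model to a new input: one multiply and one add."""
    w, b = params
    return w * x + b


if __name__ == "__main__":
    # Training data drawn from y = 2x + 1 (noise-free for simplicity).
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [2 * x + 1 for x in xs]
    params = train(xs, ys)      # "in the cloud"
    print(infer(params, 10.0))  # "at the edge": close to 21
```

Real models have millions or billions of parameters instead of two, but the shape is the same: training repeats many passes over large datasets, inference is one forward pass.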

Powering the race for Edge AI

AI chips can be broadly classified into those built for cloud services and those built for edge computing. Even though NVIDIA currently dominates the cloud, we are only in the early innings of edge AI. We need new technological paradigms for edge workloads, which don’t enjoy temperature-controlled data centres with terabytes of memory and vast amounts of storage. Enter Axelera AI.

In late 2019, inside Bitfury in Amsterdam (the largest Bitcoin miner outside China), a team of engineers led by Bitfury’s Head of AI, Fabrizio Del Maffeo, went into stealth mode and got to work. They started building software and hardware to accelerate AI at the edge. Fast forward about two years, and they spun off from Bitfury and imec as Axelera AI. Shortly after, Evangelos Eleftheriou (CTO), Ioannis Koltsidas (VP of AI Software), and Ioannis Papistas (Senior R&D Engineer) joined the founding team. Since then, the company has raised over $37m and grown to more than 100 employees globally, with people coming from Intel, Qualcomm, Google, IBM, and others.

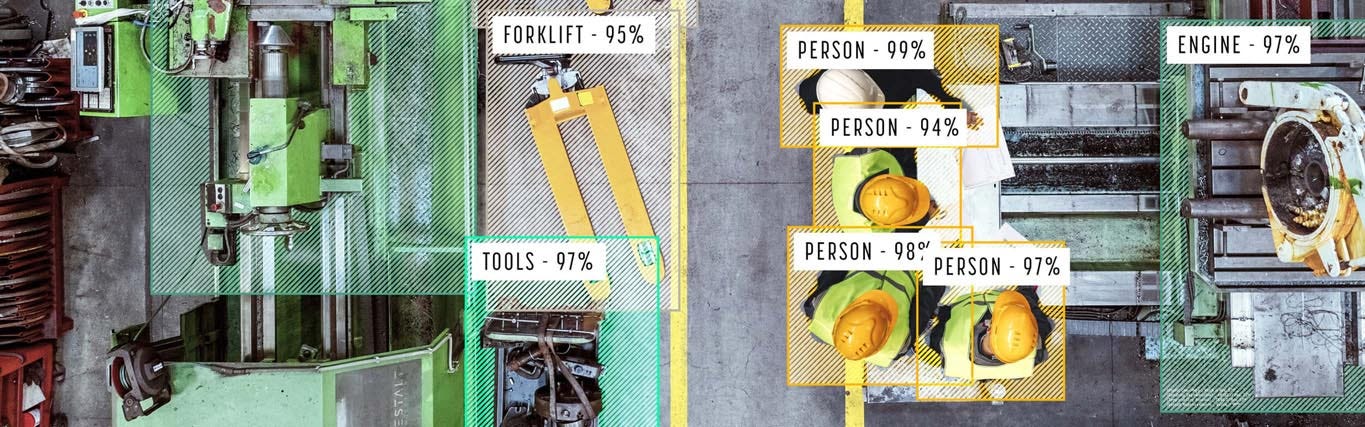

Late last year, Axelera AI launched its first platform, covering hardware and software to empower thousands of AI applications at the edge. Their first use case? Computer vision — a field of AI that trains computers to interpret the visual world and understand what they “see.” The examples mentioned before — the self-driving car, the drone, the facial recognition security system — and many more AI applications in the physical world are powered by computer vision.

For cases where efficiency plays a key role, Axelera AI’s hardware, centred around its AI chip (the Metis AIPU), offers a 10x improvement over the incumbents. Remember, we are talking about running AI algorithms on small objects in the physical world, not in large data centres, so power and energy efficiency are critical. I asked Ioannis Koltsidas, the company’s co-founder and VP of AI Software, to tell us more:

“In the traditional way of processing data (and AI is all about processing data), the memory and the CPU are separate. Whenever we need a computation, data is moved back and forth between memory and the CPU (the central processing unit of a computer). This limits speed and energy efficiency. At Axelera AI, we follow a radically different approach, better suited to AI applications in the physical world: in-memory processing. Without getting overly technical, our chips run computations entirely in memory. Our Digital In-Memory Compute engine performs matrix-vector multiplications in constant time (O(1) complexity) and without any intermediate data movement. We have found a way to deliver this with high performance at low energy and cost. Such an architecture is perfectly suited to edge applications, where performance density and energy efficiency are critical. The fundamental technology, however, can be applied to other computing environments (e.g. datacenter & cloud).”
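To make the contrast concrete, here is a toy accounting model that illustrates the general principle; it is an assumption-laden simplification, not a description of the Metis design. It counts how many values cross the memory-processor boundary for one matrix-vector multiplication y = W·x with an M × N weight matrix. In the conventional case every weight is fetched to the processor; in an idealised in-memory array the weights stay where they are stored, only the input vector goes in and the output comes out, and all M × N multiply-accumulates happen in parallel in a single step. That "single step" holds only while the matrix fits in one array.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Reference matrix-vector product y = W @ x (what both models compute)."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def conventional_traffic(m: int, n: int) -> int:
    """Values moved when weights live in memory and compute happens in the CPU:
    all m*n weights plus the n inputs are fetched, and m outputs are written back."""
    return m * n + n + m


def in_memory_traffic(m: int, n: int) -> int:
    """Idealised in-memory compute: weights never move; only x goes in, y comes out."""
    return n + m


def sequential_steps(m: int, n: int) -> int:
    """Multiply-accumulate steps for a processor doing one MAC at a time."""
    return m * n


def in_memory_steps(m: int, n: int) -> int:
    """Idealised array: all MACs happen at once, so one step regardless of m and n."""
    return 1


if __name__ == "__main__":
    w = [[1.0, 2.0], [3.0, 4.0]]
    print(matvec(w, [1.0, 1.0]))  # [3.0, 7.0]
    m, n = 1024, 1024  # hypothetical layer size
    print(conventional_traffic(m, n), in_memory_traffic(m, n))
    print(sequential_steps(m, n), in_memory_steps(m, n))
```

For a 1024 × 1024 layer, the toy model moves about a million values in the conventional case versus about two thousand with in-memory compute, which is the intuition behind the speed and energy claims. Real chips add overheads this sketch ignores, such as tiling matrices larger than one array, accumulating partial results, and moving activations between layers.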

But hardware is only part of the story. A platform empowering AI applications cannot thrive without attracting builders and offering a great developer experience. That’s where software comes into play. Ioannis added:

“The average data scientist or machine learning engineer doesn’t have the technical expertise to make AI applications work in edge devices with maximum performance. They train ML models in the cloud and build applications in high-level languages and frameworks, but if they run these at the edge, the performance will be poor. Part of the problem is that there are so many edge devices (from drones to cameras and cars to smartwatches) with different architectures, platforms, and software stacks. We solve this by providing the Voyager SDK, an end-to-end integrated framework for application development for the Metis AI platform that allows for rapid development. Developers can tap off-the-shelf tools such as Facebook’s PyTorch or Google’s TensorFlow ML frameworks and won’t need expertise in new AI development environments. We also provide an ML optimization toolchain that leverages performance-boosting approaches like graph optimizations and quantization, which compresses AI models with negligible losses in accuracy.”
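Quantization is easy to illustrate. Below is a minimal sketch of symmetric, per-tensor int8 post-training quantization, one common scheme among several and not necessarily the one the Voyager SDK uses: each float weight is mapped to an 8-bit integer through a single scale factor, cutting storage by 4x compared with 32-bit floats, and dequantizing recovers values within half a scale step of the originals. The weights are made-up examples.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w ~ scale * q, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the int8 range we allow.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map 8-bit integers back to approximate float values."""
    return [scale * qi for qi in q]


if __name__ == "__main__":
    weights = [0.42, -1.37, 0.05, 0.99, -0.61]  # hypothetical layer weights
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(q)
    print(f"scale={scale:.5f}, max abs error={max_err:.5f}")
```

Production toolchains typically calibrate scales on sample data and may quantize per channel rather than per tensor; how much accuracy is lost depends on the model.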

Chips have recently become a hot topic, as the supply shortage of the past few years made it painfully obvious that they are fundamental building blocks of innovation and key to the success of any nation-state. They power everything from a light switch to a smartphone or a fighter jet. The European Chips Act and the CHIPS for America Act (legislation to encourage chip production in the EU and US) clearly showed that the technological sovereignty of the West runs through chips. Strengthening Europe’s presence in the global AI and chip sectors is imperative. And with its HQ in Eindhoven, Netherlands, and R&D teams across Europe, including Greece, Axelera AI is perfectly positioned to spearhead this movement. The battle for the future of AI chips has just begun.

Learn more about Axelera AI on their website and look at their open roles across engineering and business.

Startup Jobs

Looking for your next career move? Check out job openings from Greek startups hiring in Greece, abroad, and remotely.

News

Austrian Post acquired a majority stake in Agile Actors, one of Greece's largest software development organisations.

Behavioral marketing platform Wunderkind raised a $76m Series C round.

Blockchain operational intelligence platform Metrika raised $4m (a Series A extension) led by M12, Microsoft's venture fund, bringing its total raised to $22m.

Robotics startup AICA, which develops software to make robotic programming more efficient, raised CHF 1.2m.

Last-mile delivery startup Svuum raised funding from Olympia Group.

More new VC funds to support Greek startups are set to be announced this year.

New Products

Autodesigner by Uizard, Appteum, Save All

Interesting Reads & Podcasts

Thoughts on the spin-off process at Greek universities by Aristos Doxiadis, Partner at Big Pi Ventures (link), and a list of NTUA spin-offs (link).

Aimilios Chalamandaris, Head of Text-to-Speech at Samsung, on founding Innoetics, getting acquired by Samsung, and new tech paradigms (link).

What is a product manager? by Joseph Alvertis (link).

Hiring product managers and formulating the interview process by Manos Kyriakakis, Head of Product & Growth at Simpler (link).

What happened to the API economy? by Kostas Pardalis (link).

On hiring for smarts, passion, and optimism with Alexis Pantazis, Exec Director and co-founder of HellasDirect (link).

When new discoveries challenge Nobel-winning theories by Lefteris Statharas (link).

Card payments 101 by George Karabelas, Principal at VentureFriends (link).

Staking and validators in the Ethereum blockchain by Elias Simos, co-founder & CEO of Rated (link).

A brief thread on tech cycles and venture capital:

Events

“Talent Days” by College Link on Mar 10-11

“Bitcoin Miniscript and script templates” by Bitcoin & Blockchain Tech (Athens) on Mar 13

“Turning tax into a tool in founders' hands” by Tech Finance Network on Mar 14

“Angular Athens 16th Meetup” by Angular Athens on Mar 14

“62nd Athens Agile Meetup” by Agile Greece on Mar 15

“Quality dashboards that stick” by BI & Analytics Athens on Mar 16

“UX Greece welcomes Peter Merholz” by UX Greece on Mar 22

“ath3ns meetup at Orfium” by ath3ns | community & web3 on Mar 23

If you’re new to Startup Pirate, you can subscribe below.

Thanks for reading, and see you in two weeks!

P.S. if you’re enjoying this newsletter, share it with some friends or drop a like by clicking the button below ⤵️