From 0 to 2M Users in Generative AI

Democratising product design with AI, top AI and cryptography scientists, edge computing, growth vs M&A, jobs, events, and more

Friends, Happy New Year! For the first edition of 2024, we welcome a Greek founder who will take us through their journey of building in Generative AI for over 2 million users. Below, you can also find jobs, news, resources, events, and more.

From 0 to 2M Users in Generative AI

The following is a conversation with Ioannis Sintos, co-founder & CIO of Uizard, an AI-powered tool that helps anyone create product designs in minutes. They have over 2M users and thousands of designs generated daily. With Ioanni, we discussed:

the journey building in AI over the past 6+ years

how Uizard democratises product design

Uizard’s underlying technology and technical challenges

building an engine to measure product market fit

the future of Generative AI and product design

Let’s get to it.

Ioanni, fantastic to have you here. Lots to chat about, from searching for product-market fit to technical challenges and democratising product design. You have been building Uizard for a while, long before the recent AI boom. How did it all start?

IS: We have been building Uizard for 6+ years. It all started as a machine learning research project called pix2code in 2017 in Copenhagen, Denmark. Uizard only fully formed as a company in early 2018 after a trip to San Francisco and a hacking session in a Mountain View garage — like a true Silicon Valley cliché. The initial idea and research paper from Tony Beltramelli, co-founder and CEO of Uizard, was that anyone could draw a wireframe on a piece of paper or a whiteboard, and AI could convert this into a digital artefact, democratising UI design and engineering.

We got some initial traction as soon as we published our research paper and proof of concept and then raised our first funding round in May 2018. Since then, we have raised over $18M from Insight Partners, byFounders, LDV Capital, and others. The first years were heavily skewed towards R&D, building our core AI engine and packaging this into a product that would turn wireframes into high-fidelity mockups. Then, the user would use our editor to create interconnections between the screens and produce an interactive prototype. Things looked pretty basic back then, but as they say, "If you're not embarrassed by the first version of your product, you've launched too late".

Fast forward to today, you have built a platform that's used by over 2 million users. Can you walk us through how you enable creators to design in minutes?

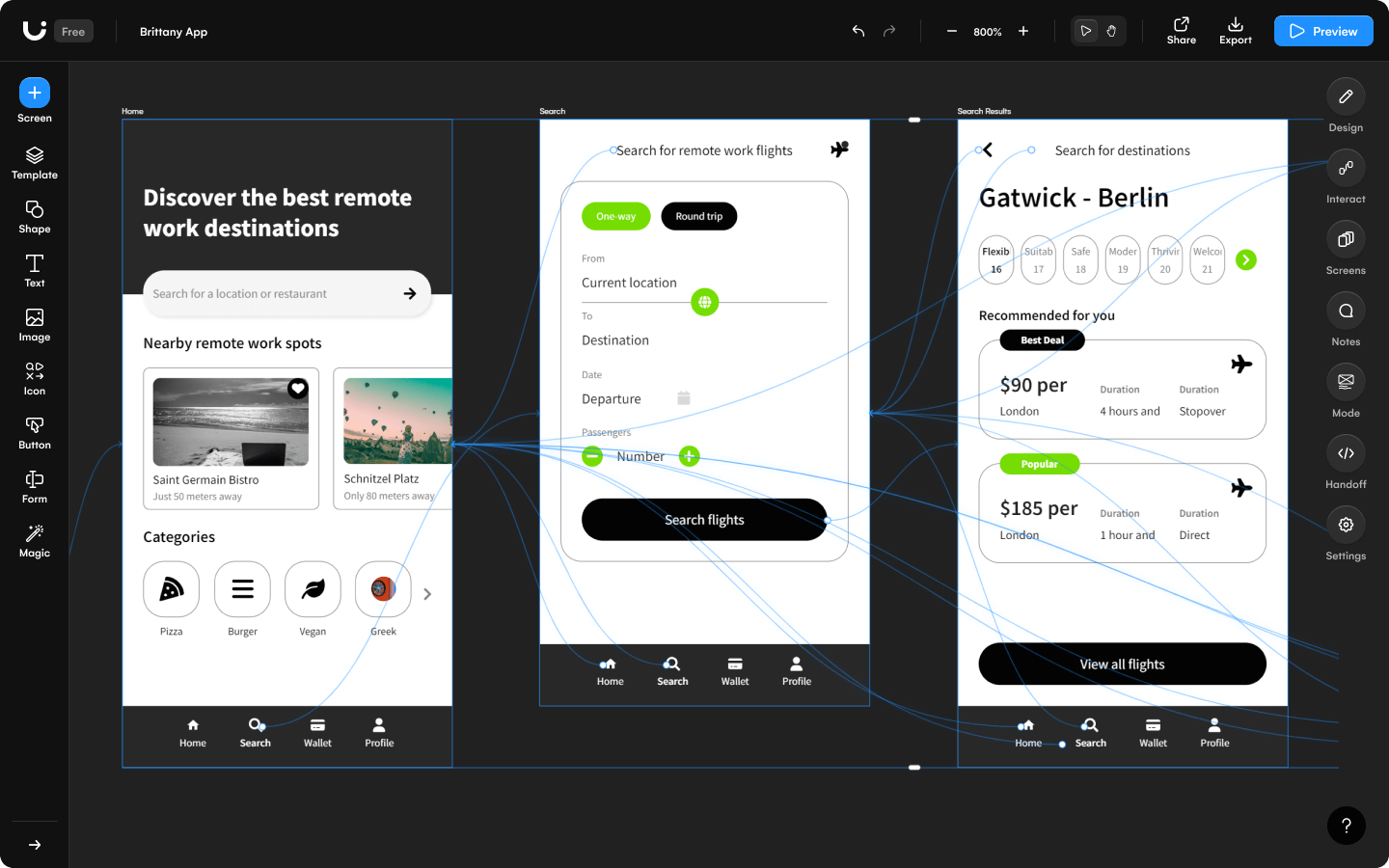

IS: Uizard is now used by over 2M users, and thousands of designs are generated daily. Our goal is twofold: to lower the barrier to entry, opening up product design to a much broader group of people, and to make the process a lot faster for professional designers and engineers. Essentially, we aim to be the world's easiest-to-use design and ideation tool.

The two main pillars of our platform are the editor and the AI engine. The editor comes with a growing collection of out-of-the-box components, from buttons and templates to lists, interactions, and more, and users find it intuitive without needing prior experience or tutorials. It is paired with AI, whose building blocks we have been developing over the years, which powers the whole product experience, accelerating the design process and making it extremely easy to use.

You can get from a text description of what you intend to design to a fully-fledged prototype in minutes (true text-to-mockup!), and you can later refine it as you see fit. This is a huge time saver compared to starting from scratch on a blank canvas.

Users can also input individual screens (not just text descriptions), and Uizard will generate new screens with variations, new themes, text, images and styles. We have seen users experimenting with queries like "Design these screens in dark mode", "Make my website look like Airbnb", etc. You can even snap a screenshot of your favourite website or a picture of something you designed with pen and paper and feed that into our platform as a starting point. You bring the ideas, and AI does the rest.

We have also built a professional design AI assistant that accompanies users throughout the design process, giving tips, best practices and pitfalls to avoid.

What’s the underlying technology that powers this?

IS: In 2017, there were Deep Learning models that took images as input and produced textual output, e.g. a description in English. We thought to apply the same technique but map what the neural network recognises to front-end code or a design representation instead of plain English. To our surprise, it worked! The neural network was able to produce this output even with a small labelled data set, an approach known as supervised learning. We then moved towards semi-supervised learning, mixing labelled and unlabelled inputs with synthetic data. We created algorithms that generated hand-drawn wireframes, labelled them, and then used that data for training. This way, we managed to train on very big data sets and increase the accuracy of our model. We reused the building blocks of our first neural network pipeline for other tasks like converting low-fidelity to high-fidelity mockups, design system generation, etc.
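To make the synthetic-data idea concrete, here is a minimal sketch of how labelled "hand-drawn" wireframes could be generated programmatically. It is an illustration only, not Uizard's actual pipeline: the component labels, the one-component-per-row layout, and the jitter-based "shaky pen" model are all assumptions made for this example.

```python
import random
from dataclasses import dataclass

# Hypothetical label set; a real system would cover many more component types.
COMPONENT_TYPES = ["button", "image", "text", "input"]


@dataclass
class Box:
    label: str
    x: float
    y: float
    w: float
    h: float


def random_layout(rng, n_rows=4, width=320.0, row_height=80.0):
    """Stack one clean, labelled component per row (assumed toy layout)."""
    boxes = []
    for row in range(n_rows):
        label = rng.choice(COMPONENT_TYPES)
        w = rng.uniform(0.4, 0.9) * width
        x = rng.uniform(0, width - w)
        boxes.append(Box(label, x, row * row_height + 10, w, row_height - 20))
    return boxes


def hand_drawn(box, rng, jitter=3.0):
    """Return the four corners of a box, each nudged to mimic a shaky pen stroke."""
    corners = [
        (box.x, box.y),
        (box.x + box.w, box.y),
        (box.x + box.w, box.y + box.h),
        (box.x, box.y + box.h),
    ]
    return [
        (cx + rng.uniform(-jitter, jitter), cy + rng.uniform(-jitter, jitter))
        for cx, cy in corners
    ]


def make_example(seed):
    """One synthetic training pair: noisy strokes (input) and clean labels (target)."""
    rng = random.Random(seed)
    layout = random_layout(rng)
    strokes = [hand_drawn(box, rng) for box in layout]
    return strokes, layout


strokes, labels = make_example(seed=0)
for corners, box in zip(strokes, labels):
    print(box.label, [(round(px), round(py)) for px, py in corners])
```

Because the generator knows what it drew, every example comes with its label for free, which is what makes it practical to scale a training set far beyond what manual annotation allows.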

Since day one, science and R&D have been our #1 priority. Thanks to our growing research team, we have developed a lot of proprietary technology specifically addressing design problems. The core is detecting what is in an image (webpage, mockup, wireframe, photo, etc. — we are pretty agnostic in the image input format) through Computer Vision. Then, we can map those elements into different formats, and the sky is the limit in what functionalities can be enabled. We could even map it to front-end code, and this is something we are currently exploring.

Uizard does not rely entirely on visuals; it is multi-modal. Recently, we started complementing our technology with LLMs and natural language processing. This is how we built Autodesigner, where you can go from a text description to a digital artefact. Regarding LLMs, I believe we have an unfair advantage because LLMs have been trained on vast amounts of web data. Our hypothesis is that user interfaces, HTML, CSS and web data in general are somewhat native to those LLMs and better understood than other kinds of data, which plays in our favour simply because we are already within that domain.

Who are the people that benefit the most from Uizard, and what was your journey to attaining product market fit?

IS: Our target user is literally anyone who needs to produce product designs. Non-experts and experts, from founders and freelancers to marketers, business analysts, product managers, and more, can jump straight from ideation to implementation. We also notice product teams benefiting heavily from using Uizard by producing significantly more iterations of their designs and getting more user feedback in less time, eliminating design bottlenecks.

Now, product market fit is very much a moving target. It's the nature of any competitive industry; the only constant is change. Initially, our target customers were professional designers and engineers, and our entire messaging and marketing campaigns were focused on those personas. But we couldn't have been more wrong. We figured out that because of its simplicity, Uizard was more like a toy to them back then, as they were accustomed to more complex tooling and techniques. We managed to turn this simplicity into our advantage by shifting our attention to another market: individuals and teams who have product design needs but are not necessarily professionals. It took us about two years to realise that the users who benefitted the most from our technology back then were not professional designers and engineers but non-experts. A lot has changed in the meantime, both in terms of our technology and positioning, leading to some of the world’s biggest enterprises becoming Uizard customers, such as Tesla, Google, IBM, Samsung, and more.

We have been big fans of a product market fit framework that was popularised by Rahul Vohra, the CEO and founder of the email client app Superhuman. At the core of it is the idea that you send a question to your user base and ask, "How disappointed would you be if Uizard would no longer exist?". After benchmarking against many companies and customer bases, they concluded that if more than 40% of your users would be very disappointed if your product stopped operating, then you have product market fit. Of course, it's not a perfect solution, but we found it to be a good guiding beacon for us and still use it occasionally.
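The 40% rule reduces to a simple calculation over survey answers. The sketch below is one way to compute it; the answer labels, function names, and sample data are made up for illustration and are not taken from Uizard's or Superhuman's tooling.

```python
from collections import Counter

# Hypothetical answer labels; the real survey wording may differ.
VERY = "very disappointed"
SOMEWHAT = "somewhat disappointed"
NOT = "not disappointed"

PMF_THRESHOLD = 0.40  # the 40% benchmark from the framework


def pmf_score(responses):
    """Return the share of respondents who answered 'very disappointed'."""
    if not responses:
        raise ValueError("need at least one response")
    counts = Counter(r.strip().lower() for r in responses)
    return counts[VERY] / len(responses)


def has_product_market_fit(responses, threshold=PMF_THRESHOLD):
    """True when the 'very disappointed' share is strictly above the threshold."""
    return pmf_score(responses) > threshold


# Made-up sample: 9 very, 8 somewhat, 3 not disappointed.
sample = [VERY] * 9 + [SOMEWHAT] * 8 + [NOT] * 3
print(f"{pmf_score(sample):.0%} very disappointed")
print("Product market fit:", has_product_market_fit(sample))
```

Tracking this score per user segment rather than only in aggregate is a natural extension, since the interview describes fit shifting from professional designers to non-experts.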

Over the years, we went from pure hypotheses to basic analytics to implementing and running this framework. At the moment, we have introduced more advanced analytics, looking into user behaviour and trying to spot mismatches between what our users tell us they intend to do with Uizard and their actual behaviour.

What are the main technical challenges you face as an AI product that millions of people use?

IS: The main R&D challenge over the years has been the accuracy of our machine learning models. Improving the accuracy and the results we return to our users is a never-ending story. Humans are very creative, and there will always be designs and inputs that our engine has never seen before and will not know how to handle. This is why our algorithms require ongoing training, refinement, and data collection.

We started with small data sets and synthetic data, but we now have over 2 million users generating thousands of designs daily. This is an excellent data source to tap into and improve our models. However, as we enter a world with more user-generated data, there are challenges, too. A system should be able to tell the difference between user-generated and synthetic data, avoid a loop where output data is fed back into the training process, etc. Recently, there was a story about OpenAI where sensitive data got into the training data set and eventually leaked to the world. Every AI company that uses user data for training faces similar challenges. We have people building their next products and successful businesses relying on Uizard, so it's essential for us not to tap into sensitive information. To do that, we employ a number of techniques to anonymously capture structural and stylistic information only, without compromising sensitive user information, thus ensuring data privacy for our users.
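One common way to address both concerns is to tag every sample with its provenance and filter before training. The following is a minimal sketch under stated assumptions: the `Source` categories, the `Sample` fields, and the filtering policy are hypothetical and do not describe Uizard's actual techniques.

```python
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    USER = "user"                  # created by a person
    SYNTHETIC = "synthetic"        # produced by a data generator
    MODEL_OUTPUT = "model_output"  # produced by the model itself


@dataclass(frozen=True)
class Sample:
    sample_id: str
    source: Source
    component_types: tuple   # structural information, e.g. ("button", "image")
    text_content: str = ""   # free text that may contain sensitive data


def strip_sensitive(sample):
    """Keep structure and provenance, drop free text (assumed anonymisation step)."""
    return Sample(sample.sample_id, sample.source, sample.component_types, "")


def build_training_set(samples):
    """Exclude model outputs to avoid a feedback loop, then anonymise the rest."""
    kept = []
    for sample in samples:
        if sample.source is Source.MODEL_OUTPUT:
            continue  # never train on what the model generated
        kept.append(strip_sensitive(sample))
    return kept


raw = [
    Sample("a1", Source.USER, ("button", "input"), "Acme Q3 launch plan"),
    Sample("a2", Source.SYNTHETIC, ("image", "text")),
    Sample("a3", Source.MODEL_OUTPUT, ("button",), "generated copy"),
]
training_set = build_training_set(raw)
print([s.sample_id for s in training_set])
```

The design choice here is to record provenance at creation time, because it is generally hard to tell model output from human input after the fact.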

This is part of why we have invested a lot in R&D over the past 6+ years, with a dedicated research team developing cutting-edge AI technologies specifically for design.

What do you think is the future of Generative AI and product design?

IS: We are just scratching the surface, and the democratisation of design and other creative skills is upon us. We will see many more creators jumping in with no prior experience, without needing endless tutorials and manuals or thousands of hours spent on a specific tool to master a craft. Creative processes that used to take hours or days now take minutes or even seconds.

In product design in particular, this efficiency will shorten development cycles and make teams move faster. You could have AI generate different prototypes, run them past several users to collect feedback, aggregate it, and incorporate it into the next design version, all done by one or more AI agents.

Suppose everyone uses the same AI tools to generate product designs. Where do you think the novelty of design creations produced in the future will lie? Prompt engineering?

IS: Even though AI produces the design or the first version of it, it is still your input, creativity, and ideas. It might be with a text description, pen and paper, or a low or high-fidelity mockup, but in any case, you will still need to put your own ideas to work. Plus, in most scenarios, people will need to iterate on what AI generates. You won't be able to get a 100% representation of what you have in mind from the get-go. Perhaps that's my wishful thinking. I hope we don't end up in a world where everything is a copy-paste of everything else, and you just get more of the same. I believe that even in a world where AI contributes to much of what we see, designers and creators will still find ways to express themselves and produce novel and unique visual effects.

Thank you so much for taking the time, Ioanni!

IS: Thanks, Alex.

Jobs

Check out job openings here from startups hiring in Greece. The list now has 536 jobs from 98 companies.

News

10% of the top papers (selected for oral presentation) in the #1 AI conference globally based on h5-index — NeurIPS — last December were co-authored by Greek scientists. Most of them have graduated from the National Technical University of Athens.

Another field where you can find a high density of world-class Greek talent is cryptography. Greek scientists co-authored 19% of the accepted papers at one of the most prominent conferences this year (Financial Cryptography).

Open source multicloud management company Mist io announced its acquisition by Dell. (link)

Feel Therapeutics raised a new funding round from Satori Neuro, Metavallon VC, and existing investors to use objective data for mental health diagnosis, management, and care in precision medicine. (link)

New funding round raised by VR medtech NeuroVirt led by Genesis Ventures. (link)

Two Greek-founded teams already announced in the current Y Combinator cohort (W24) building in neurotechnology (Piramidal) and merchandising intelligence (BetterBasket).

Resources

We released our annual report with key highlights and aggregate figures from the investments and exits in Greek-founded startups in 2023. (link)

AI for genomics, construction robotics, and AI for drug discovery in the latest Open Coffee Athens meetup. (link)

A big startup dilemma: continue growing or look into M&A from Dimitris Glezos, founder of Transifex. (link)

Life of a Product Manager with Iosif Alvertis, VP of Product at TileDB. (link)

Optimising Blueground’s website for Google’s Core Web Vitals by Dimitris Zotos, Tech lead & Staff software engineer at Blueground. (link)

Pandelis Zembashis, Senior AI Engineer at Cosine, on edge computing and how it evolved from CDNs and distributed compute. (link)

LLM prompting and Classical AI from Konstantine Arkoudas. (link)

Data Models: from warehouse to business impact with Tasso Argyros, founder & CEO of ActionIQ. (link)

Argyris Kaninis, co-founder of Softomotive, on the journey of building the company and getting acquired by Microsoft. (link)

Creating a Greek LLM after fine-tuning Llama2 7b by Vassilis Antonopoulos, co-founder at 11tensors. (link)

React folder structure for scalable applications by John Raptis. (link)

Events

We’re hosting “Greeks In Tech” in Zurich (Feb 1) and London (Feb 6). Join us!

“Legal Startup School” by Startup Pathways and Saplegal on Jan 17 and 18

“Kubernetes Athens vol22” by Athens Kubernetes Meetup on Jan 17

“A Node.js Odyssey” by Thessaloniki not-only Java Meetup on Jan 18

“Building High-Performance Microservices with Go” by Golang Athens on Jan 18

“UX Greece welcomes Amy Santee” by UX Greece on Jan 24

“Basketball Analytics” by Business Intelligence and Analytics Athens on Jan 25

“ZkSummit 11” on Apr 10

That’s all for this week. Tap the heart ❤️ below if you liked this piece — it helps me understand which themes you like best and what I should do more.

Find me on LinkedIn or Twitter.

Thanks for reading,

Alex
